OrderedDict([(UUID('acc73dc0-d5ae-499e-8cc4-63f70f2d935f'),

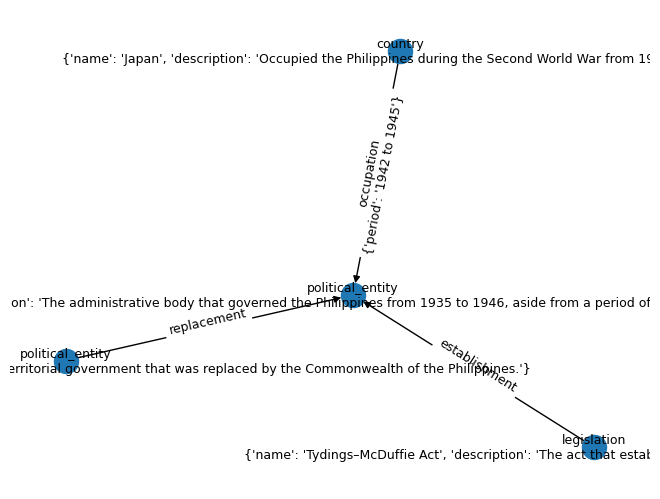

{'nodes': {'semantic_id': 'insular_government',

'category': 'political_entity',

'attributes': {'name': 'Insular Government',

'description': 'A United States territorial government that was replaced by the Commonwealth of the Philippines.'}}}),

(UUID('38d26bc0-e096-4524-945a-77b9e4ae0f49'),

{'nodes': {'semantic_id': 'commonwealth_of_the_philippines',

'category': 'political_entity',

'attributes': {'name': 'Commonwealth of the Philippines',

'years_active': '1935 to 1946',

'description': 'The administrative body that governed the Philippines during this period, except for a period of exile from 1942 to 1945 when Japan occupied the country.'}}}),

(UUID('9555e806-1679-4bc0-99d7-55717f21bdef'),

{'nodes': {'semantic_id': 'tydings_mcduffie_act',

'category': 'legal_document',

'attributes': {'name': 'Tydings–McDuffie Act',

'description': "The act that established the Commonwealth of the Philippines as a transitional administration in preparation for the country's full achievement of independence."}}}),

(UUID('90d9b404-119e-4b31-a0cf-ad102105687f'),

{'edges': {'from_node': UUID('acc73dc0-d5ae-499e-8cc4-63f70f2d935f'),

'to_node': UUID('38d26bc0-e096-4524-945a-77b9e4ae0f49'),

'category': 'replaced'}}),

(UUID('2cdca930-c9f5-4d65-8da0-4fcc351ac2d0'),

{'edges': {'from_node': UUID('9555e806-1679-4bc0-99d7-55717f21bdef'),

'to_node': UUID('38d26bc0-e096-4524-945a-77b9e4ae0f49'),

'category': 'established'}}),

(UUID('c7120f30-4152-4e88-bec1-698bfdd2d5e1'),

{'nodes': {'semantic_id': 'lake_oesa',

'category': 'natural_feature',

'attributes': {'name': 'Lake Oesa',

'elevation': 2267,

'elevation_unit': 'm',

'location': {'park': 'Yoho National Park',

'city': 'Field',

'province': 'British Columbia',

'country': 'Canada'}}}}),

(UUID('7c83cf46-05fc-491d-9667-20acf68fe70f'),

{'nodes': {'semantic_id': 'arafura_swamp',

'category': 'natural_feature',

'attributes': {'name': 'Arafura Swamp',

'type': 'inland freshwater wetland',

'location': {'region': 'Arnhem Land',

'territory': 'Northern Territory',

'country': 'Australia'},

'size': {'area': {'value': None, 'unit': 'km2'},

'expansion_during_wet_season': True},

'description': 'a near pristine floodplain, possibly the largest wooded swamp in the Northern Territory and Australia',

'cultural_significance': 'of great cultural significance to the Yolngu people, in particular the Ramingining community',

'filming_location': 'Ten Canoes'}}}),

(UUID('f39070c7-1d59-4d1b-a4a4-c8a18c222f85'),

{'nodes': {'semantic_id': 'wapizagonke_lake',

'category': 'natural_feature',

'attributes': {'name': 'Wapizagonke Lake',

'type': 'body of water',

'location': {'sector': 'Lac-Wapizagonke',

'city': 'Shawinigan',

'park': 'La Mauricie National Park',

'region': 'Mauricie',

'province': 'Quebec',

'country': 'Canada'}}}}),

(UUID('9a8a31e6-d311-4085-845d-48ae33707b51'),

{'nodes': {'semantic_id': 'amursky_district',

'category': 'administrative_district',

'attributes': {'name': 'Amursky District',

'country': 'Russia',

'region': 'Khabarovsk Krai'}}}),

(UUID('f85fb9ae-7e0f-46b2-b039-63ee01e6ce5d'),

{'nodes': {'semantic_id': 'khabarovsky_district',

'category': 'administrative_district',

'attributes': {'name': 'Khabarovsky District',

'country': 'Russia',

'region': 'Khabarovsk Krai',

'area': None,

'area_unit': None,

'administrative_center': 'Khabarovsk'}}}),

(UUID('21425234-233f-4f40-b6b3-98e818755151'),

{'edges': {'from_node': UUID('f85fb9ae-7e0f-46b2-b039-63ee01e6ce5d'),

'to_node': UUID('9a8a31e6-d311-4085-845d-48ae33707b51'),

'category': 'separated_by'}}),

(UUID('43464202-f216-469b-94d2-8ca7ad3d92f1'),

{'nodes': {'semantic_id': 'silver_lake',

'category': 'natural_feature',

'attributes': {'name': 'Silver Lake',

'type': 'body of water',

'location': {'county': 'Cheshire County',

'state': 'New Hampshire',

'country': 'United States',

'towns': ['Harrisville', 'Nelson']},

'outflows': ['Minnewawa Brook', 'The Branch'],

'ultimate_recipient': 'Connecticut River'}}}),

(UUID('f938374c-ec1e-49b0-b049-91257d6ae64d'),

{'nodes': {'semantic_id': 'hyderabad_police_area',

'category': 'administrative_district',

'attributes': {'name': 'Hyderabad Police area',

'jurisdiction_size': 'smallest'}}}),

(UUID('595a2f04-abae-4845-b45c-6df6a8ed9ab5'),

{'nodes': {'semantic_id': 'hyderabad_district',

'category': 'administrative_district',

'attributes': {'name': 'Hyderabad district',

'jurisdiction_size': 'second_smallest'}}}),

(UUID('b3beb831-6954-4ca9-87f3-052767e60856'),

{'edges': {'from_node': UUID('f938374c-ec1e-49b0-b049-91257d6ae64d'),

'to_node': UUID('595a2f04-abae-4845-b45c-6df6a8ed9ab5'),

'category': 'jurisdiction_size_hierarchy'}}),

(UUID('252323ba-7590-4b55-ae43-e6b9da12ff9e'),

{'edges': {'from_node': UUID('595a2f04-abae-4845-b45c-6df6a8ed9ab5'),

'to_node': UUID('631d3937-3f47-4598-8f45-bdb90d5eb91f'),

'category': 'jurisdiction_size_hierarchy'}}),

(UUID('723c0ccc-8f09-43ad-bbcf-97b08d5c1bd8'),

{'edges': {'from_node': UUID('631d3937-3f47-4598-8f45-bdb90d5eb91f'),

'to_node': UUID('4c681f75-6f72-4771-831a-4aa16149195a'),

'category': 'jurisdiction_size_hierarchy'}}),

(UUID('4c681f75-6f72-4771-831a-4aa16149195a'),

{'nodes': {'semantic_id': 'hmda_area',

'category': 'administrative_district',

'attributes': {'name': 'Hyderabad Metropolitan Development Authority (HMDA) area',

'jurisdiction_size': 'largest',

'type': 'urban_planning_agency',

'apolitical': True,

'covers': ['ghmc_area', 'suburbs_of_ghmc_area']}}}),

(UUID('631d3937-3f47-4598-8f45-bdb90d5eb91f'),

{'nodes': {'semantic_id': 'ghmc_area',

'category': 'administrative_district',

'attributes': {'name': 'GHMC area',

'jurisdiction_size': 'second_largest',

'alternate_name': 'Hyderabad city'}}}),

(UUID('90c5ce53-79aa-4aaf-a27d-7400e6ac1c08'),

{'nodes': {'semantic_id': 'suburbs_of_ghmc_area',

'category': 'administrative_district',

'attributes': {'name': 'Suburbs of GHMC area',

'jurisdiction_size': 'medium',

'type': 'residential'}}}),

(UUID('9a4b69f8-749a-4b7c-a3e3-e2db4f3823d1'),

{'nodes': {'semantic_id': 'hmwssb',

'category': 'administrative_body',

'attributes': {'name': 'Hyderabad Metropolitan Water Supply and Sewerage Board',

'type': 'water_management'}}}),

(UUID('c48817dd-b382-4db9-ad18-d3e2190cdcf5'),

{'edges': {'from_node': UUID('4c681f75-6f72-4771-831a-4aa16149195a'),

'to_node': UUID('631d3937-3f47-4598-8f45-bdb90d5eb91f'),

'category': 'jurisdiction_size_hierarchy'}}),

(UUID('4b64fe19-941d-44ce-8cd0-8f94f503dea5'),

{'edges': {'from_node': UUID('4c681f75-6f72-4771-831a-4aa16149195a'),

'to_node': UUID('90c5ce53-79aa-4aaf-a27d-7400e6ac1c08'),

'category': 'jurisdiction_size_hierarchy'}}),

(UUID('268f7e8f-a2c7-4a83-94b9-24baeca1be73'),

{'edges': {'from_node': UUID('4c681f75-6f72-4771-831a-4aa16149195a'),

'to_node': UUID('9a4b69f8-749a-4b7c-a3e3-e2db4f3823d1'),

'category': 'manages'}}),

(UUID('7956f84b-20a8-4836-ae7a-c7311d716cd1'),

{'nodes': {'semantic_id': 'san_juan',

'category': 'city',

'attributes': {'name': 'San Juan',

'location': {'country': 'Puerto Rico',

'region': 'north-eastern coast'},

'borders': {'north': 'Atlantic Ocean',

'south': ['Caguas', 'Trujillo Alto'],

'east': ['Carolina'],

'west': ['Guaynabo']},

'area': {'value': 76.93, 'unit': 'square miles'},

'water_bodies': ['San Juan Bay',

'Condado Lagoon',

'San José Lagoon'],

'water_area': {'value': 29.11,

'unit': 'square miles',

'percentage': 37.83}}}}),

(UUID('75abfc38-a7ea-4db7-9c2b-5dddaf51c493'),

{'nodes': {'semantic_id': 'urban_hinterland',

'category': 'administrative_district',

'attributes': {'name': 'Urban hinterland',

'type': 'urban_area'}}}),

(UUID('6f12633f-2502-4467-a29c-ebe5b0699810'),

{'nodes': {'semantic_id': 'kreisfreie_stadte',

'category': 'administrative_district',

'attributes': {'name': 'Kreisfreie Städte',

'type': 'district-free_city_or_town'}}}),

(UUID('e7748608-55fe-4ad2-b17a-83af70f4fc73'),

{'nodes': {'semantic_id': 'landkreise_amalgamation',

'category': 'administrative_district',

'attributes': {'name': 'Local associations of a special kind',

'type': 'amalgamation_of_districts',

'purpose': 'simplification_of_administration'}}}),

(UUID('0114478c-292d-43e4-940c-349f0b8d8060'),

{'edges': {'from_node': UUID('6f12633f-2502-4467-a29c-ebe5b0699810'),

'to_node': UUID('75abfc38-a7ea-4db7-9c2b-5dddaf51c493'),

'category': 'grouping'}}),

(UUID('9c504b5a-98b9-4620-9d56-0c18fe35005f'),

{'edges': {'from_node': UUID('e7748608-55fe-4ad2-b17a-83af70f4fc73'),

'to_node': UUID('75abfc38-a7ea-4db7-9c2b-5dddaf51c493'),

'category': 'comprises'}}),

(UUID('6b27c2fc-9694-4f6e-b61c-425360f1c8f7'),

{'edges': {'from_node': UUID('e7748608-55fe-4ad2-b17a-83af70f4fc73'),

'to_node': UUID('6f12633f-2502-4467-a29c-ebe5b0699810'),

'category': 'comprises'}}),

(UUID('7715b916-5807-45f4-8408-2770897a7581'),

{'nodes': {'semantic_id': 'norfolk_island',

'category': 'island',

'attributes': {'name': 'Norfolk Island',

'location': {'ocean': 'South Pacific Ocean',

'relative_location': 'east of Australian mainland'},

'coordinates': {'latitude': -29.033, 'longitude': 167.95},

'area': {'value': 34.6, 'unit': 'square kilometres'},

'coastline': {'length': 32, 'unit': 'km'},

'highest_point': 'Mount Bates'}}}),

(UUID('0db9008a-ae7e-4e32-a7b3-ae5c7d8f93d2'),

{'edges': {'from_node': UUID('7715b916-5807-45f4-8408-2770897a7581'),

'to_node': UUID('48a3d3e7-34c1-4a64-93ba-72a6108b3e57'),

'category': 'part_of'}}),

(UUID('48a3d3e7-34c1-4a64-93ba-72a6108b3e57'),

{'nodes': {'semantic_id': 'phillip_island',

'category': 'island',

'attributes': {'name': 'Phillip Island',

'location': {'relation': 'second largest island of the territory',

'coordinates': {'latitude': -29.117, 'longitude': 167.95},

'distance_from_main_island': {'value': 7,

'unit': 'kilometres'}}}}}),

(UUID('52ec31ee-9fc6-42a1-9e6c-daf0ea0a9390'),

{'edges': {'from_node': UUID('48a3d3e7-34c1-4a64-93ba-72a6108b3e57'),

'to_node': UUID('7715b916-5807-45f4-8408-2770897a7581'),

'category': 'part_of'}}),

(UUID('08f207c1-6915-4237-ac4e-902815d9cfae'),

{'nodes': {'semantic_id': 'star_stadium',

'category': 'stadium',

'attributes': {'name': 'Star (Zvezda) Stadium',

'former_name': 'Lenin Komsomol Stadium',

'location': {'city': 'Perm', 'country': 'Russia'},

'usage': 'football matches',

'home_team': 'FC Amkar Perm',

'capacity': 17000,

'opened': '1969-06-05'}}}),

(UUID('5be79bf7-cd2a-487f-8833-36ae11257df8'),

{'nodes': {'semantic_id': 'perm',

'category': 'city',

'attributes': {'name': 'Perm',

'location': {'river': 'Kama River',

'region': 'Perm Krai',

'country': 'Russia',

'geography': 'European part of Russia near the Ural Mountains'},

'administrative_status': 'administrative centre'}}}),

(UUID('e9a848ba-35b3-42e4-b9e6-aa0ea8651d92'),

{'edges': {'from_node': UUID('08f207c1-6915-4237-ac4e-902815d9cfae'),

'to_node': UUID('5be79bf7-cd2a-487f-8833-36ae11257df8'),

'category': 'located_in'}}),

(UUID('57c8adca-9dcc-4257-be85-bfb48eacd310'),

{'nodes': {'semantic_id': 'paea',

'category': 'municipality',

'attributes': {'name': 'Paea',

'location': {'island': 'Tahiti',

'subdivision': 'Windward Islands',

'region': 'Society Islands',

'country': 'French Polynesia',

'territory': 'France'},

'population': 13021}}}),

(UUID('62866902-6285-4c38-98b0-7496dbe73fd3'),

{'nodes': {'semantic_id': 'potamogeton_amplifolius',

'category': 'plant',

'attributes': {'common_names': ['largeleaf pondweed',

'broad-leaved pondweed'],

'habitat': ['lakes', 'ponds', 'rivers'],

'water_depth': 'often in deep water',

'distribution': 'North America'}}}),

(UUID('0da6e66c-64c6-4155-bb6f-88e1b0c9a349'),

{'nodes': {'semantic_id': 'soltonsky_district',

'category': 'administrative_district',

'attributes': {'name': 'Soltonsky District',

'location': {'region': 'Altai Krai', 'country': 'Russia'}}}}),

(UUID('2bdd9668-a653-4815-bcde-f43dcf5ff4a5'),

{'nodes': {'semantic_id': 'krasnogorsky_district',

'category': 'administrative_district',

'attributes': {'name': 'Krasnogorsky District',

'location': {'region': 'Altai Krai', 'country': 'Russia'}}}}),

(UUID('e9620276-009f-4f5a-99b6-b41d93fbe791'),

{'nodes': {'semantic_id': 'sovetsky_district',

'category': 'administrative_district',

'attributes': {'name': 'Sovetsky District',

'location': {'region': 'Altai Krai', 'country': 'Russia'}}}}),

(UUID('4bdbb329-0688-4621-9c67-e1af0e8d57fe'),

{'nodes': {'semantic_id': 'smolensky_district',

'category': 'administrative_district',

'attributes': {'name': 'Smolensky District',

'location': {'region': 'Altai Krai', 'country': 'Russia'}}}}),

(UUID('d3fca76e-6cb9-47b7-8fdf-282cd0de4bee'),

{'nodes': {'semantic_id': 'biysky_district',

'category': 'administrative_district',

'attributes': {'name': 'Biysky District',

'location': {'region': 'Altai Krai',

'country': 'Russia',

'geography': 'east of the krai'},

'administrative_status': 'administrative and municipal district (raion)',

'bordering_districts': ['Soltonsky_district',

'Krasnogorsky_district',

'Sovetsky_district',

'Smolensky_district',

'City_of_Biysk']}}}),

(UUID('a375ae9a-9282-4916-9e6b-02da8a824e3f'),

{'nodes': {'semantic_id': 'city_of_biysk',

'category': 'city',

'attributes': {'name': 'Biysk',

'location': {'region': 'Altai Krai', 'country': 'Russia'},

'administrative_status': 'administrative center'}}}),

(UUID('6674891e-b1e6-4c85-9821-54b4a7fd923a'),

{'edges': {'from_node': UUID('d3fca76e-6cb9-47b7-8fdf-282cd0de4bee'),

'to_node': UUID('0da6e66c-64c6-4155-bb6f-88e1b0c9a349'),

'category': 'bordering'}}),

(UUID('5f81b958-60c6-40a5-8bea-9fdacae9f671'),

{'edges': {'from_node': UUID('d3fca76e-6cb9-47b7-8fdf-282cd0de4bee'),

'to_node': UUID('2bdd9668-a653-4815-bcde-f43dcf5ff4a5'),

'category': 'bordering'}}),

(UUID('8077d42e-5e70-44dd-aade-c764087f139f'),

{'edges': {'from_node': UUID('d3fca76e-6cb9-47b7-8fdf-282cd0de4bee'),

'to_node': UUID('e9620276-009f-4f5a-99b6-b41d93fbe791'),

'category': 'bordering'}}),

(UUID('abe4eb3e-0a01-48f2-b97d-67a30519a4d3'),

{'edges': {'from_node': UUID('d3fca76e-6cb9-47b7-8fdf-282cd0de4bee'),

'to_node': UUID('4bdbb329-0688-4621-9c67-e1af0e8d57fe'),

'category': 'bordering'}}),

(UUID('a27a6ceb-536d-4dbe-9798-e5d86e9755c6'),

{'edges': {'from_node': UUID('d3fca76e-6cb9-47b7-8fdf-282cd0de4bee'),

'to_node': UUID('a375ae9a-9282-4916-9e6b-02da8a824e3f'),

'category': 'bordering'}}),

(UUID('3d2af122-d4b9-47f1-a034-c9f23e262e14'),

{'nodes': {'semantic_id': 'contoocook_lake',

'category': 'lake',

'attributes': {'name': 'Contoocook Lake',

'location': {'county': 'Cheshire County',

'state': 'New Hampshire',

'country': 'United States',

'towns': ['Jaffrey', 'Rindge']},

'connection': {'to': 'pool_pond',

'type': 'forms_headwaters_of'},

'outflow': {'to': 'contoocook_river', 'direction': 'north'},

'outflow_destination': 'merrimack_river'}}}),

(UUID('1eafa7a8-a830-4472-8b32-c071159c8140'),

{'nodes': {'semantic_id': 'pool_pond',

'category': 'lake',

'attributes': {'name': 'Pool Pond',

'connection': {'to': 'contoocook_lake',

'type': 'forms_headwaters_of'}}}}),

(UUID('f841df5f-a4ff-4a6b-8656-1458252aca37'),

{'edges': {'from_node': UUID('3d2af122-d4b9-47f1-a034-c9f23e262e14'),

'to_node': UUID('1eafa7a8-a830-4472-8b32-c071159c8140'),

'category': 'forms_headwaters_of'}}),

(UUID('d50d66d9-c89d-4c0a-9c61-fa4e856ab2c2'),

{'edges': {'from_node': UUID('3d2af122-d4b9-47f1-a034-c9f23e262e14'),

'to_node': UUID('56ae1a37-74f4-486b-b517-34b99027ba36'),

'category': 'outflows_to'}}),

(UUID('56ae1a37-74f4-486b-b517-34b99027ba36'),

{'nodes': {'semantic_id': 'contoocook_river',

'category': 'river',

'attributes': {'name': 'Contoocook River',

'flow_direction': 'north',

'outflow_destination': 'merrimack_river'}}}),

(UUID('0c92123d-fbc3-47e7-b752-65df1d6680c0'),

{'nodes': {'semantic_id': 'merrimack_river',

'category': 'river',

'attributes': {'name': 'Merrimack River',

'location': {'city': 'Penacook',

'state': 'New Hampshire',

'country': 'United States'}}}}),

(UUID('6e9b6a2d-926e-4b20-97ab-d8bb217a5029'),

{'edges': {'from_node': UUID('56ae1a37-74f4-486b-b517-34b99027ba36'),

'to_node': UUID('0c92123d-fbc3-47e7-b752-65df1d6680c0'),

'category': 'flows_into'}}),

(UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

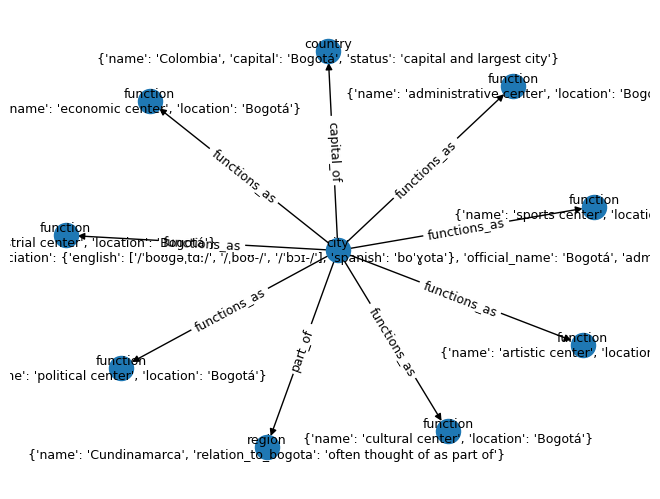

{'nodes': {'semantic_id': 'bogota',

'category': 'city',

'attributes': {'name': 'Bogotá',

'pronunciation': {'english': ['/ˈboʊɡəˌtɑː/',

'/ˌboʊ-/',

'/ˈbɔɪ-/'],

'spanish': 'boˈɣota'},

'official_name': 'Bogotá',

'administration': 'Capital District'}}}),

(UUID('adc293cf-401b-49b6-928d-ada04da0e4bf'),

{'nodes': {'semantic_id': 'political_center',

'category': 'function',

'attributes': {'name': 'political center',

'location': 'Bogotá'}}}),

(UUID('108added-3b0f-4b67-9e28-fce89a168e46'),

{'nodes': {'semantic_id': 'economic_center',

'category': 'function',

'attributes': {'name': 'economic center',

'location': 'Bogotá'}}}),

(UUID('7fad3f22-d955-4f54-a1e2-5683bf4639e8'),

{'nodes': {'semantic_id': 'administrative_center',

'category': 'function',

'attributes': {'name': 'administrative center',

'location': 'Bogotá'}}}),

(UUID('404e8a42-e9e9-4081-9890-669ba48d1b36'),

{'nodes': {'semantic_id': 'industrial_center',

'category': 'function',

'attributes': {'name': 'industrial center',

'location': 'Bogotá'}}}),

(UUID('8b80ba4f-77b2-4ee6-8548-a66108963fb7'),

{'nodes': {'semantic_id': 'artistic_center',

'category': 'function',

'attributes': {'name': 'artistic center',

'location': 'Bogotá'}}}),

(UUID('056f1544-f292-4506-ba8d-18d6e294d433'),

{'nodes': {'semantic_id': 'cultural_center',

'category': 'function',

'attributes': {'name': 'cultural center',

'location': 'Bogotá'}}}),

(UUID('d747fcf6-5b60-4595-a127-f3e247cbb8a3'),

{'nodes': {'semantic_id': 'sports_center',

'category': 'function',

'attributes': {'name': 'sports center',

'location': 'Bogotá'}}}),

(UUID('59719cce-5c68-47cf-8111-481c74e73c3b'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('8ad7ae8e-ed3a-415f-8d0e-a8984bd7717e'),

'category': 'capital_of'}}),

(UUID('0bc7053b-2183-41df-995a-faecd919ad45'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('5576789f-9306-4a82-8736-882db81abdf2'),

'category': 'part_of'}}),

(UUID('73230839-050b-4ace-9dd1-7927b1bd1034'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('adc293cf-401b-49b6-928d-ada04da0e4bf'),

'category': 'functions_as'}}),

(UUID('04b8ded0-c2c1-441f-b7f7-069b05f80338'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('108added-3b0f-4b67-9e28-fce89a168e46'),

'category': 'functions_as'}}),

(UUID('0a86d51a-bd57-4a63-b494-08075a2bcb4a'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('7fad3f22-d955-4f54-a1e2-5683bf4639e8'),

'category': 'functions_as'}}),

(UUID('58c0a891-5c05-48e5-ba2a-1c230b588997'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('404e8a42-e9e9-4081-9890-669ba48d1b36'),

'category': 'functions_as'}}),

(UUID('5bad3267-dceb-4067-8d02-bc8c748d50d3'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('8b80ba4f-77b2-4ee6-8548-a66108963fb7'),

'category': 'functions_as'}}),

(UUID('d80bed21-cd68-454d-a142-223fb30d5db6'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('056f1544-f292-4506-ba8d-18d6e294d433'),

'category': 'functions_as'}}),

(UUID('6c12a6ad-244d-4d7e-8c25-f3a6288967fb'),

{'edges': {'from_node': UUID('2de55d21-12b4-493e-954a-acf0b7bf4ac2'),

'to_node': UUID('d747fcf6-5b60-4595-a127-f3e247cbb8a3'),

'category': 'functions_as'}}),

(UUID('87517804-e5a2-44d9-82bd-23b4e33c2a40'),

{'nodes': {'semantic_id': 'bogota',

'category': 'city',

'attributes': {'name': 'Bogotá',

'functions': ['political center',

'economic center',

'administrative center',

'industrial center',

'artistic center',

'cultural center',

'sports center']}}}),

(UUID('8ad7ae8e-ed3a-415f-8d0e-a8984bd7717e'),

{'nodes': {'semantic_id': 'colombia',

'category': 'country',

'attributes': {'name': 'Colombia',

'capital': 'Bogotá',

'status': 'capital and largest city'}}}),

(UUID('5576789f-9306-4a82-8736-882db81abdf2'),

{'nodes': {'semantic_id': 'cundinamarca',

'category': 'region',

'attributes': {'name': 'Cundinamarca',

'relation_to_bogota': 'often thought of as part of'}}}),

(UUID('af6b4ebf-7c7c-4dd0-a58e-ab8c292eaf7c'),

{'edges': {'from_node': UUID('87517804-e5a2-44d9-82bd-23b4e33c2a40'),

'to_node': UUID('8ad7ae8e-ed3a-415f-8d0e-a8984bd7717e'),

'category': 'capital_of'}}),

(UUID('3265632a-4065-46c4-82cf-527b3d4abc13'),

{'edges': {'from_node': UUID('87517804-e5a2-44d9-82bd-23b4e33c2a40'),

'to_node': UUID('5576789f-9306-4a82-8736-882db81abdf2'),

'category': 'part_of'}}),

(UUID('d97d057d-2564-427d-9703-e77a61ff58c7'),

{'nodes': {'semantic_id': 'intracellular_fluid',

'category': 'fluid',

'attributes': {'name': 'intracellular fluid',

'volume': '2/3 of body water',

'amount_in_72_kg_body': '25 litres',

'percentage_of_total_body_fluid': 62.5}}}),

(UUID('380f506f-c2cf-453e-879f-fb58b3f3d1db'),

{'nodes': {'semantic_id': 'body_fluid',

'category': 'fluid',

'attributes': {'name': 'body fluid',

'total_volume_in_72_kg_body': '40 litres'}}}),

(UUID('1b0bebc1-49a1-419f-bfb2-d50cffeed740'),

{'edges': {'from_node': UUID('d97d057d-2564-427d-9703-e77a61ff58c7'),

'to_node': UUID('380f506f-c2cf-453e-879f-fb58b3f3d1db'),

'category': 'part_of'}}),

(UUID('6e5f08dc-fe95-4b74-884d-dcce8470290a'),

{'nodes': {'semantic_id': 'territorial_waters',

'category': 'geographic_area',

'attributes': {'name': 'territorial waters',

'definition': 'a belt of coastal waters extending at most 12 nautical miles (22.2 km; 13.8 mi) from the baseline (usually the mean low-water mark) of a coastal state',

'source': '1982 United Nations Convention on the Law of the Sea'}}}),

(UUID('443e77c0-cff1-43e4-89f2-ba748d4421a1'),

{'edges': {'from_node': UUID('6e5f08dc-fe95-4b74-884d-dcce8470290a'),

'to_node': UUID('d5019515-a9d9-4d23-89cf-dac81f7d96ea'),

'category': 'extends_from'}}),

(UUID('58b64eae-e6c2-47f1-8916-eaf1dcc87e6b'),

{'edges': {'from_node': UUID('6e5f08dc-fe95-4b74-884d-dcce8470290a'),

'to_node': UUID('11903999-15a7-4776-8bae-f1803429147f'),

'category': 'belongs_to'}}),

(UUID('7b534168-d2e7-498e-9115-5e21d6c638f3'),

{'nodes': {'semantic_id': 'territorial_sea',

'category': 'geographic_area',

'attributes': {'name': 'territorial sea',

'definition': 'a belt of coastal waters extending at most 12 nautical miles (22.2 km; 13.8 mi) from the baseline (usually the mean low-water mark) of a coastal state',

'sovereign_territory': True,

'foreign_ship_passage': 'innocent passage through it or transit passage for straits',

'jurisdiction': 'extends to airspace over and seabed below'}}}),

(UUID('d5019515-a9d9-4d23-89cf-dac81f7d96ea'),

{'nodes': {'semantic_id': 'baseline',

'category': 'geographic_feature',

'attributes': {'name': 'baseline',

'definition': 'usually the mean low-water mark of a coastal state'}}}),

(UUID('11903999-15a7-4776-8bae-f1803429147f'),

{'nodes': {'semantic_id': 'coastal_state',

'category': 'legal_entity',

'attributes': {'name': 'coastal state'}}}),

(UUID('7a93cd6e-7f97-43fa-9054-22d3e4478ecf'),

{'edges': {'from_node': UUID('7b534168-d2e7-498e-9115-5e21d6c638f3'),

'to_node': UUID('11903999-15a7-4776-8bae-f1803429147f'),

'category': 'belongs_to'}}),

(UUID('4dec6036-1299-4093-ae68-3b6cecc73053'),

{'edges': {'from_node': UUID('7b534168-d2e7-498e-9115-5e21d6c638f3'),

'to_node': UUID('d5019515-a9d9-4d23-89cf-dac81f7d96ea'),

'category': 'extends_from'}}),

(UUID('d86a7f75-df06-47f1-a30d-67a921d822bf'),

{'nodes': {'semantic_id': 'strait',

'category': 'geographic_feature',

'attributes': {'name': 'strait',

'sovereign_territory': True,

'jurisdiction': {'airspace': True, 'seabed': True}}}}),

(UUID('1baf2bf2-6083-481f-8980-d2f9793f58e6'),

{'nodes': {'semantic_id': 'maritime_delimitation',

'category': 'legal_concept',

'attributes': {'name': 'maritime delimitation',

'definition': "Adjustment of the boundaries of a coastal state's territorial sea, exclusive economic zone, or continental shelf"}}}),

(UUID('396cc35f-de5f-4717-9cb5-2cf511456fb2'),

{'edges': {'from_node': UUID('d86a7f75-df06-47f1-a30d-67a921d822bf'),

'to_node': UUID('11903999-15a7-4776-8bae-f1803429147f'),

'category': 'belongs_to'}}),

(UUID('88b88486-07be-4ae4-8d51-c2d88ca9f125'),

{'edges': {'from_node': UUID('1baf2bf2-6083-481f-8980-d2f9793f58e6'),

'to_node': UUID('11903999-15a7-4776-8bae-f1803429147f'),

'category': 'involves'}}),

(UUID('d8b37bae-dbdb-49c8-9e35-6c87c902f3f9'),

{'nodes': {'semantic_id': 'uninsured_depositor',

'category': 'stakeholder',

'attributes': {'deposit_amount': '>100,000 Euro',

'treatment': 'subject to a bail-in',

'new_role': 'new shareholders of the legacy entity'}}}),

(UUID('7862ab2f-0f92-48af-ba10-12cdac45a10f'),

{'nodes': {'semantic_id': 'bank_of_cyprus',

'category': 'organization',

'attributes': {'name': 'Bank of Cyprus',

'size': 'largest banking group in Cyprus',

'relation_to_cyprus_popular_bank': "absorbed the 'good' Cypriot part of Cyprus Popular Bank after it was shuttered"}}}),

(UUID('1c509f8b-300e-47ac-ad62-4dcf675ca11d'),

{'nodes': {'semantic_id': 'cyprus_popular_bank',

'category': 'organization',

'attributes': {'name': 'Cyprus Popular Bank',

'previous_name': 'Marfin Popular Bank',

'status': 'shuttered in 2013',

'size': 'second largest banking group in Cyprus',

'parent': 'Bank of Cyprus'}}}),

(UUID('82b3a659-7692-4909-a812-fc247f97ed6c'),

{'edges': {'from_node': UUID('d8b37bae-dbdb-49c8-9e35-6c87c902f3f9'),

'to_node': UUID('4845f0f8-9a9e-4bf2-b9e6-49ba7ee13b44'),

'category': 'holds_deposits'}}),

(UUID('a80831eb-a3c9-4ad6-a6ba-9db107876050'),

{'edges': {'from_node': UUID('1c509f8b-300e-47ac-ad62-4dcf675ca11d'),

'to_node': UUID('7862ab2f-0f92-48af-ba10-12cdac45a10f'),

'category': 'merged_with'}}),

(UUID('2d393fc3-fe9b-46dd-90f7-2fadb227fccd'),

{'nodes': {'semantic_id': 'central_bank_of_cyprus',

'category': 'organization',

'attributes': {'name': 'Central Bank of Cyprus',

'role': 'Governor and Board members amended the lawyers of the legacy entity without consulting the special administrator'}}}),

(UUID('87a173a3-a32b-4a36-a1b2-6248a92eb14c'),

{'nodes': {'semantic_id': 'veteran_banker',

'category': 'stakeholder',

'attributes': {'name': 'Chris Pavlou',

'expertise': 'Treasury'}}}),

(UUID('7af74b0b-30ab-44c4-8adf-8e19ecf04a14'),

{'nodes': {'semantic_id': 'special_administrator',

'category': 'stakeholder',

'attributes': {'name': 'Andri Antoniadou',

'role': 'ran the legacy entity for two years, from March 2013 until 3 March 2015'}}}),

(UUID('4845f0f8-9a9e-4bf2-b9e6-49ba7ee13b44'),

{'nodes': {'semantic_id': 'legacy_entity',

'category': 'organization',

'attributes': {'description': "the 'bad' part or legacy entity holds all the overseas operations as well as uninsured deposits above 100,000 Euro, old shares and bonds",

'ownership_stake': '4.8% of Bank of Cyprus',

'board_representation': 'does not hold a board seat',

'previous_operations': 'overseas operations of the now defunct Cyprus Popular Bank'}}}),

(UUID('bfe012be-e584-401f-bd34-f6d147e7831c'),

{'nodes': {'semantic_id': 'marfin_investment_group',

'category': 'stakeholder',

'attributes': {'name': 'Marfin Investment Group',

'role': 'former major shareholder of the legacy entity'}}}),

(UUID('14585bad-087d-4ce7-bbc2-1d89e4cd7548'),

{'edges': {'from_node': UUID('2d393fc3-fe9b-46dd-90f7-2fadb227fccd'),

'to_node': UUID('4845f0f8-9a9e-4bf2-b9e6-49ba7ee13b44'),

'category': 'amended_lawyers_without_consulting'}}),

(UUID('14eb57de-1b12-42b5-8b91-945cdfd08442'),

{'edges': {'from_node': UUID('4845f0f8-9a9e-4bf2-b9e6-49ba7ee13b44'),

'to_node': UUID('7af74b0b-30ab-44c4-8adf-8e19ecf04a14'),

'category': 'managed_by'}}),

(UUID('e367aa2a-74bb-426e-b17c-f4ecf2032e6f'),

{'edges': {'from_node': UUID('87a173a3-a32b-4a36-a1b2-6248a92eb14c'),

'to_node': UUID('4845f0f8-9a9e-4bf2-b9e6-49ba7ee13b44'),

'category': 'took_over_as_special_administrator'}}),

(UUID('1c9504d1-c7e5-4b0b-ad7a-24a03cc9c498'),

{'edges': {'from_node': UUID('4845f0f8-9a9e-4bf2-b9e6-49ba7ee13b44'),

'to_node': UUID('bfe012be-e584-401f-bd34-f6d147e7831c'),

'category': 'pursuing_legal_action_against'}})])